A history of ICT: Selected highlights

Computing as a profession is so young that it is instructive to talk about how we got to where we are today. Our beginnings were with unit record machines, which is what data processing equipment was called before computers came along and became popular in the business community. The first section discusses how data was processed using unit record machines, and chronicles the transition to computer data processing. We’ll then take a look at hardware development over the past 50 years, the microcomputer and its life cycle, and the Internet and its heritage.

Unit record

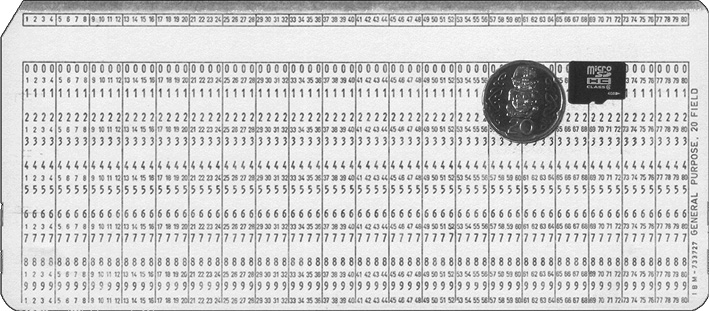

Fifty years ago, punched cards were the medium for storing data. Many purpose-built machines had to work in concert for organizations to process data and produce reports and other printed output. This was referred to as unit record equipment, where each function was performed by a single purpose-built machine (unit).

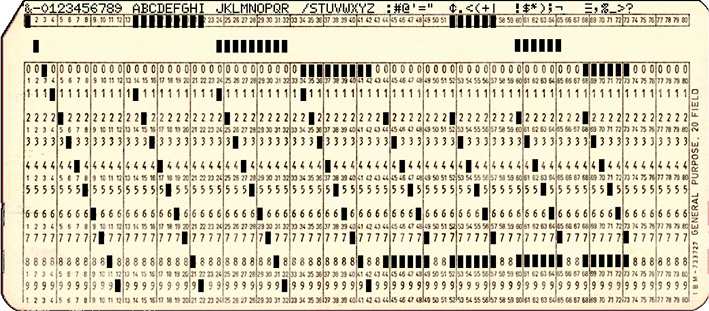

Numbers, alpha and special characters punched.

Consider the steps that were required to process a simple payroll. Data had to be punched into cards. The cards measured 82 × 187 mm, and a stack of 150 cards stood about 25 mm high. Each card could hold up to 80 characters. An example of one of these cards is shown above.

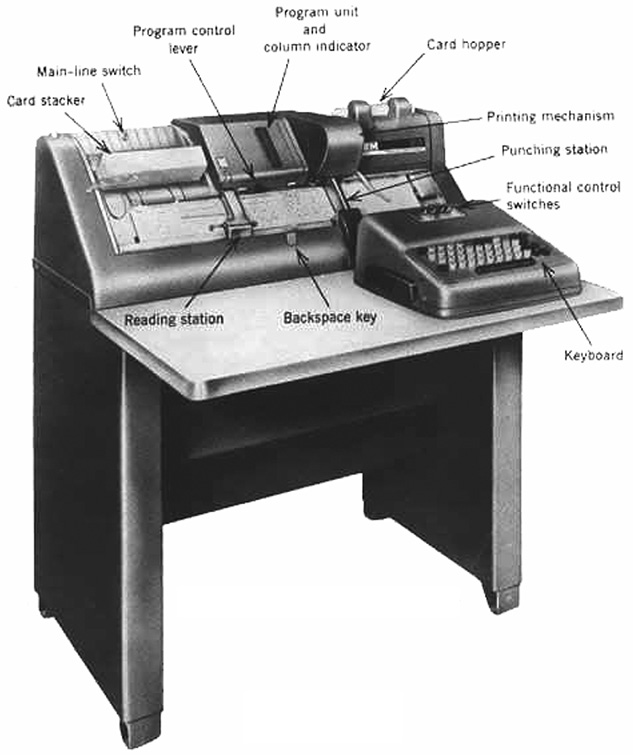

International Business Machines (IBM) manufactured various card punching devices, the most popular being the 026 Keypunch. Machine operators used the 026 to punch data into the cards. A companion device, the IBM 056 Card Verifier, looked and operated almost identically, but where the 026 had a punch station the 056 had a contact station (where a keystroke made an electrical contact instead of punching the card). To reduce mistakes, the punched cards were kept in order, and a verifier operator then keyed the exact same data against the pre-punched cards. If a keystroke did not make a valid contact, the machine would stop and notch the top of the card at the point where the error was detected. The operator would either fix it or have someone else fix it. Information entered was thereby validated to a certain extent. If it was punched wrong on the first pass and keyed wrong in the same way on the second pass, then incorrect information got into the system.
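The verification pass described above amounts to a column-by-column comparison of two keyings. The following is only a minimal sketch of that idea; the function name `verify_card` and the sample card contents are hypothetical, and the real 056 worked electromechanically, not on strings.

```python
CARD_COLUMNS = 80  # each card held up to 80 characters


def verify_card(punched, rekeyed):
    """Return the first column (counting from 1) where the second keying
    disagrees with what is already punched, or None if they agree."""
    first = punched.ljust(CARD_COLUMNS)    # unpunched columns read as blanks
    second = rekeyed.ljust(CARD_COLUMNS)
    for column, (hole, key) in enumerate(zip(first, second), start=1):
        if hole != key:
            return column                  # the verifier would stop and notch here
    return None


# Hypothetical time card: the second operator keys the name differently.
print(verify_card("00421 SMITH", "00421 SMYTH"))   # a mismatch is reported
# The same mistake made on both passes goes undetected.
print(verify_card("00421 SMYTH", "00421 SMYTH"))
```

The second call illustrates the limitation noted in the text: verification only catches errors that the two operators do not share.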

IBM 026 Keypunch.

Courtesy IBM

So, in our payroll example, the employee time cards would have to be punched to reflect the days and hours worked, based on when employees clocked in and out. Other data had to be punched as well. In this example there are two further kinds of data. The first is the production data. Each operation performed by an employee produced a number of pieces that were recorded on a purpose-designed pre-printed card. The second is the amount of scrap produced for that operation. That data was punched on a different pre-printed card. These cards, too, had to be verified.

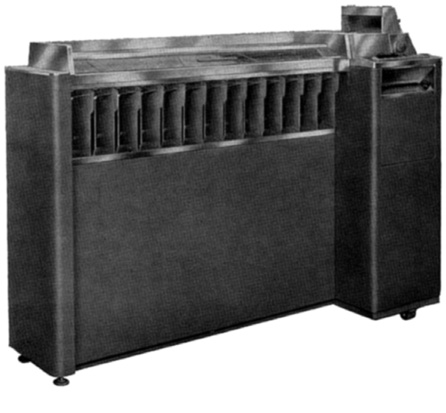

Once all of the payroll cards had been punched, they had to be sorted into order, usually by employee number and card type. Normally, the time card for each employee preceded the production card, and was followed by the scrap card. Each type was identified by a single card type code punched in the cards. Typically, the cards would be sorted one column at a time, using an 083 or 084 sorter (these could sort at 1000 or 2000 cards per minute respectively). These were purpose-built machines, designed only to sort. During the sorting operation, the sorter read the cards one at a time and dropped each into one of thirteen pockets, based on what was punched in the sorting column. The operator would then pull the cards from each pocket in order and perform a sort on the next column. Sorting was therefore a combination of machine and person, and an operator who had a lapse in concentration could mishandle the cards (known as ‘crossing their hands’), leaving the deck out of sequence. Because of that possibility, the next step was to sequence check the cards.
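Sorting one column at a time is what is now called a radix sort. The sketch below illustrates the idea under several assumptions that the text does not state: the columns are sorted least significant first, the field is purely numeric (so only ten of the pockets are used), and the card layout is invented for the example.

```python
def sort_on_column(deck, column):
    """One pass through the sorter: drop each card into the pocket for the
    digit punched in `column`, then gather the pockets in order 0 to 9."""
    pockets = [[] for _ in range(10)]      # digit pockets only (an assumption)
    for card in deck:                      # cards are read one at a time
        pockets[int(card[column])].append(card)
    # Gathering the pockets in order preserves the result of earlier passes.
    return [card for pocket in pockets for card in pocket]


def sort_deck(deck, columns):
    """Sort on a multi-column field, least significant column first."""
    for column in reversed(columns):
        deck = sort_on_column(deck, column)
    return deck


# Hypothetical layout: columns 0-3 hold the employee number, column 4 the card type.
deck = ["03172", "00421", "03171", "12003", "00422", "03173"]
sorted_deck = sort_deck(deck, columns=[0, 1, 2, 3, 4])
print(sorted_deck)
```

Each pass only works if the pockets are gathered in the right order, which is exactly the step where an operator could ‘cross their hands’.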

IBM 083 Sorter.

Fifty years ago, data was processed in sequence, meaning that the decks of cards were in order by a given field. For example a payroll file would likely be ordered by employee number. If the cards were not in the proper order, unpredictable and undesirable results would occur. Therefore, decks of cards were sequence checked.

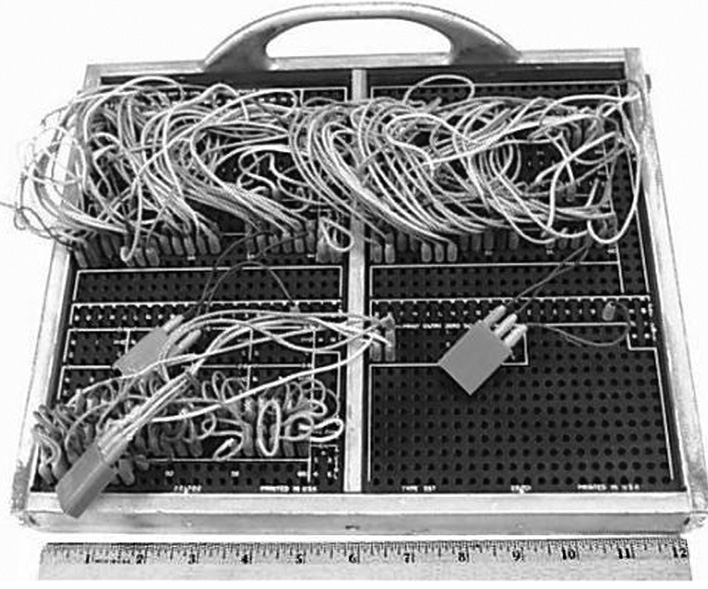

IBM 519 reproducer plugboard.

Sequence checking was performed on another purpose-built machine, the 085 or 088 collator (these could operate at up to 480 and 1300 cards per minute respectively). This machine incorporated a plug board that acted as a program for the machine, dictating which operations were to be performed and on which fields (a field is defined as a series of two or more contiguous columns). As plug board wiring became more complicated, the boards were labelled and enclosed in a metal cover to protect the wires. Unit record machines, with the exception of the sorter, were controlled by a plug board designed for the specific machine type.

After sequence checking, the cards were usually merged with the employee master cards (the collator could sequence check and merge in the same run). The master card normally held pay rates for the various functions of individual employees, as well as tax information, and year to date summary amounts for gross, tax, and the like.
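The collator's two jobs, sequence checking and merging, can be sketched as follows. This is an illustration only: the tuple layout of a card, the function names, and the rule that a master card goes in front of its detail cards are all assumptions, since the text does not say where the master card sat in the merged deck.

```python
def sequence_check(deck, key):
    """Return the position of the first card that is out of sequence, or None."""
    for i in range(1, len(deck)):
        if key(deck[i]) < key(deck[i - 1]):
            return i
    return None


def merge_decks(masters, details, key):
    """Merge two decks that are each already in sequence.
    On equal keys the master card is placed first (an assumption)."""
    merged, m, d = [], 0, 0
    while m < len(masters) and d < len(details):
        if key(masters[m]) <= key(details[d]):
            merged.append(masters[m])
            m += 1
        else:
            merged.append(details[d])
            d += 1
    merged.extend(masters[m:])     # whatever is left in either feed
    merged.extend(details[d:])
    return merged


def employee_number(card):
    return card[0]


# Hypothetical cards: (employee number, card type).
masters = [(17, "master"), (42, "master")]
details = [(17, "time"), (17, "production"), (42, "time")]

assert sequence_check(details, employee_number) is None
merged = merge_decks(masters, details, employee_number)
print(merged)
```

Merging only works because both decks are known to be in sequence, which is why the sequence check came first (or was done in the same run).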

These cards would then go to the calculating punch (the 602 or the 604 machine set). This function required two machines — the calculator and the punch, and each had its own plug board, specifically wired for that single operation. Data on the various card types in concert with the employee master card would be calculated and each card type would have various results punched in them. We’re almost there!

Then, finally, it was time to print reports and summarize employee activity (punching a new employee master file to be used for the next cycle). This was done at an accounting machine (the 402 or 407 operating at speeds of 80 to 150 cards per minute depending on the activity) which, for this example, would have had a summary punch attached. Once again both machines had to have their individual plug boards wired for that specific task. These machines could print up to 132 characters per line. They could add and subtract and accumulate totals, but could not make any other calculations.

There were other specialised machines not specifically shown in this example. Two of these were the 519 reproducer and the 557 interpreter. Any time you wanted to make a copy of a deck of cards, the reproducer would do this at 150 cards per minute. It could make an exact duplicate, or its plug board could be wired to selectively copy from one place on the original to a different place on the copy. The interpreter was used to print at the top of a card, from information already punched in the card. These printed cards, as in the example, would have been the time card, the production and scrap cards, before they were actually used by an employee.

Using these techniques, we were able to perform payroll, shop scheduling, inventory control, general ledger and many other normal administrative functions. However, as you can see, these operations were time consuming and fraught with opportunities for error. For example, sorting 3500 cards on a four column field typically took 35 minutes.
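As a rough check on that figure, the machine time alone can be estimated from the sorter speeds quoted earlier. This is a back-of-the-envelope sketch: it assumes one full pass of the deck per column and ignores everything the operator did between passes.

```python
def machine_minutes(cards, columns, cards_per_minute):
    """Time the sorter itself spends feeding cards: one pass per column."""
    return cards * columns / cards_per_minute


print(machine_minutes(3500, 4, 1000))   # 083 sorter: 14.0 minutes
print(machine_minutes(3500, 4, 2000))   # 084 sorter: 7.0 minutes
```

On these assumptions the sorter accounts for well under half of the 35 minutes; the remainder would presumably have gone on loading the hopper and gathering the pockets between passes, although the text does not break the time down.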

Computer origins

The origin of the first computer depends on who is writing the history and from which country. If you ask a German, they may say that the Zuse Z3 was the first computer; if you ask a British person, they may say that Colossus was the first computer; and if you ask an American, they may say that ENIAC was the first computer. Each can qualify their assertion by citing features that their machine had that others did not. The Zuse Z3 became operational in May 1941; Colossus in December 1943; and ENIAC in February 1946. The fact remains that electronic computing had its beginnings in the 1940s, and this stemmed from military research. It wasn’t until the late 1950s that computers became integral to business data processing.

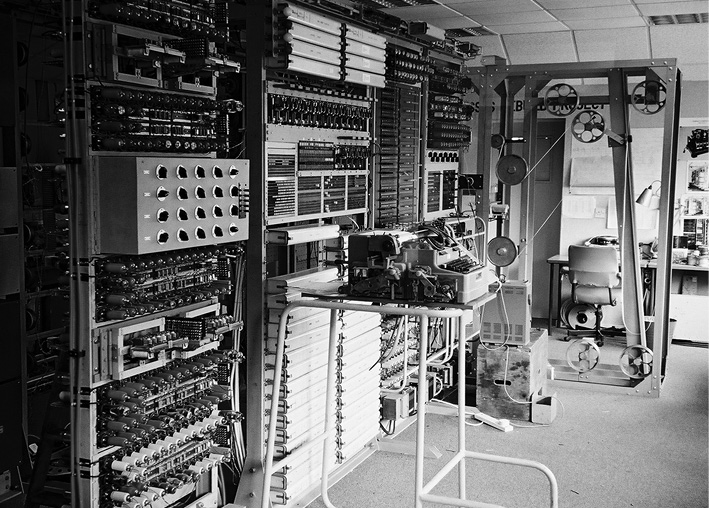

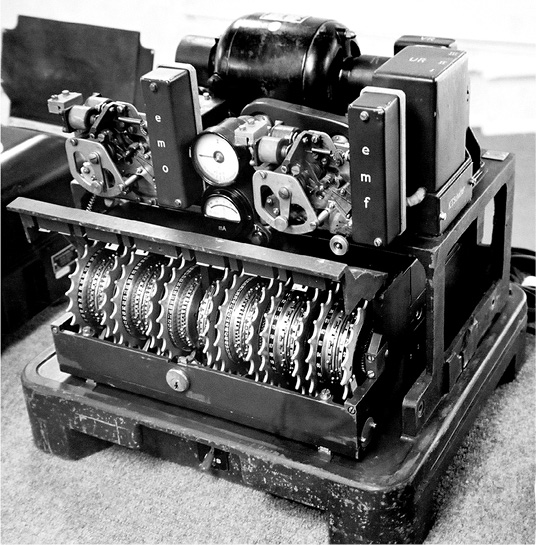

The rebuilt Colossus #9 at Bletchley Park (above), and Field Marshal Kesselring’s Lorenz (below).

The Zuse Z3 was electro-mechanical and was based on telephone relays. It was program controlled and used binary numbers. ENIAC and Colossus were both electronic and program-controlled and were based on vacuum tubes (valves).

Of the three, only Colossus made a difference to the outcome of World War II, and that contribution was significant. It was purpose built to derive the tooth patterns of the twelve wheels of the German High Command’s Lorenz Geheimschreiber — a cryptographic machine. In fact, the contribution was so significant that everything about Colossus was kept top secret for 30 years after the last of the ten Colossi was dismantled in 1945. Colossus #9 was rebuilt by Tony Sale at Bletchley Park (located in England halfway between Oxford and Cambridge) a few years ago, and in November of 2007 it was pitted against a Pentium 2 to solve a cryptanalysis problem. In that test the two machines’ performance was equivalent.

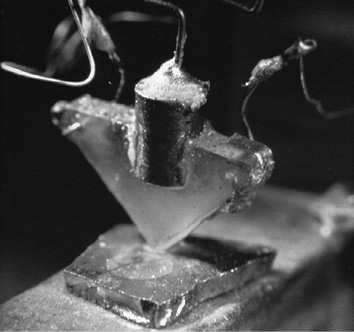

The first transistor.

Over the intervening years, many different computer companies emerged and disappeared. The invention of the transistor in 1947 by John Bardeen, Walter Brattain and William Shockley at Bell Labs, and the development by Jack Kilby of the first integrated circuit in 1958, unquestionably contributed to the development of smaller, faster computers. Intel’s creation of the 4004 (the first microprocessor) in 1971 set the stage for the evolution of the microprocessor, which we take for granted today.

Computer evolution

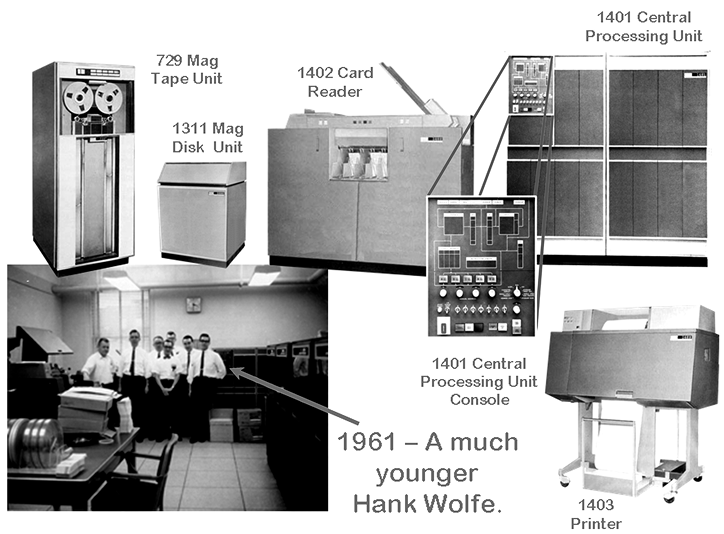

There were many computer manufacturers in the early years of computing. Names like Univac, Honeywell, RCA, Control Data Corp, and many more have come and gone. The most successful and affordable family of business computers was introduced in 1959. It was the IBM 1400 series of data processing systems, with six different models. The notion of ‘family’ provided an upgrade path as users’ needs expanded. More than 10,000 units of the 1401 model were produced. In those days, computers were leased, not sold, and the user would pay a minimum (based on configuration) of $2500 a month in rent, plus a fee for CPU usage.

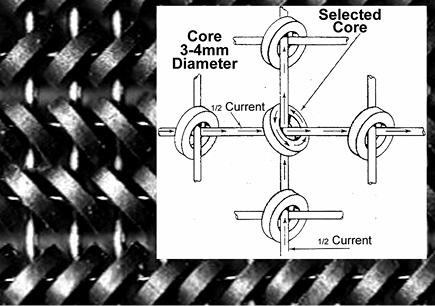

Magnetic core memory.

The first configurations available in this model had 1.2 kilobytes of 8-bit core storage, and larger configurations ranged up to 16 kilobytes. Core storage, or more properly, ferrite-core memory, was an early form of computer memory. It consisted of small magnetic ceramic donut-shaped rings, or cores. A bit was represented as on or off based on the polarity of a single core.

The initial system required three separate machines: the 1401 Central Processing Unit, the 1402 Card Reader, and the 1403 Printer. Computers can only understand and execute machine language programs. This language is primitive, cumbersome, and difficult for humans to understand. To make the creation of computer programs easier, a number of tools were created. Assembly language was first devised to translate a mnemonic code written by humans into machine executable code. Later on came compilers and interpreters that translated more English-like commands into assembler, which in turn had to be translated into machine language before it could be executed. The IBM 1401 initially used machine language only, but the customer engineers developed an assembler, the Symbolic Programming System.

IBM 1401 system.

With this simple tool set, organizations were able to replace all of the functions of the unit record equipment. Symbolic Programming System was the first assembler developed for the 1401; Autocoder, also an assembler, soon followed. These made programming the 1401 easier. Other programming languages eventually followed, such as RPG, FORTRAN and COBOL.

In addition to the initial configuration, the IBM 729 magnetic tape units could be added and subsequently, the IBM 1311 Magnetic Disk Unit became available. The initial 1311 had removable disk packs, which had 14 inch platters with ten recording surfaces capable of storing up to two megabytes of data. The disk units allowed random access processing. Prior to that, processing data had to be done sequentially — in order — one record after another. Random access facilitated reading any given record directly via its unique identifier and a physical disk location address, without first having to read sequentially through all of the preceding records.
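The difference between sequential and random access can be sketched with an in-memory file of fixed-length records. Everything here is illustrative: the record layout, the function names and the dictionary used as an index are assumptions standing in for the record identifier and physical disk address the text describes.

```python
import io

RECORD_LENGTH = 16  # hypothetical layout: 4-digit key followed by a 12-character name


def build_file(records):
    """Write fixed-length records; return the file and an index of key -> offset."""
    data = io.BytesIO()
    index = {}
    for key, name in records:
        index[key] = data.tell()                       # where this record starts
        data.write(f"{key:04d}{name:<12}".encode("ascii"))
    return data, index


def read_sequential(data, wanted_key):
    """Tape-style access: read every record from the start until the key is found."""
    data.seek(0)
    reads = 0
    while True:
        record = data.read(RECORD_LENGTH)
        if not record:
            return None, reads                         # end of file, not found
        reads += 1
        if int(record[:4].decode("ascii")) == wanted_key:
            return record[4:].decode("ascii").rstrip(), reads


def read_direct(data, index, wanted_key):
    """Disk-style access: go straight to the record's address and read it."""
    data.seek(index[wanted_key])
    record = data.read(RECORD_LENGTH)
    return record[4:].decode("ascii").rstrip(), 1


data, index = build_file([(101, "Adams"), (205, "Baker"), (309, "Clark"), (412, "Davis")])
print(read_sequential(data, 412))    # found only after reading every preceding record
print(read_direct(data, index, 412)) # found with a single read
```

With four records the saving is trivial, but the sequential cost grows with the position of the record in the file while the direct read stays at one.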

The next family of computers was the IBM System/360. This series brought with it new ideas. As noted earlier, part of the monthly cost was based on CPU usage. With the earlier machines, users noticed at the end of each month that the number of CPU seconds actually used was only a fraction of the number available: anecdotally, 20–30% utilization.

As it turns out, the computer was almost always waiting for an input or output operation (I/O) to finish before it could proceed to the next processing task. The idea of multi-tasking was born and the IBM 360 incorporated this into its disk operating system (DOS). This meant that up to three different programs could be operational at any given moment. Memory was partitioned into three discrete addressable segments (the background or batch processing partition, and optionally one or two foreground partitions). Each program was loaded into one of the three segments. As an I/O operation was initiated by the currently active partition, program control was transferred by the controlling program, the Supervisor, to the next partition. Operations in that partition were executed until it required an I/O operation, and so it continued rotating between partitions. This small change dramatically improved throughput. Later operating system versions removed the restriction of three partitions.
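The effect of switching partitions on every I/O request can be illustrated with a small tick-by-tick simulation. This is a toy model, not a description of DOS itself: the class and function names are invented, time is measured in arbitrary ticks, each job is a list of hypothetical (CPU ticks, I/O ticks) steps, and I/O for different partitions is assumed to overlap freely.

```python
from collections import deque


class Partition:
    def __init__(self, steps):
        self.steps = deque(steps)     # remaining (cpu_ticks, io_ticks) pairs
        self.cpu = self.io = 0
        self.next_step()

    def next_step(self):
        self.cpu, self.io = self.steps.popleft() if self.steps else (0, 0)

    @property
    def finished(self):
        return self.cpu == 0 and self.io == 0 and not self.steps


def simulate(programs):
    """Return (elapsed_ticks, busy_cpu_ticks) for programs sharing one CPU."""
    parts = [Partition(p) for p in programs]
    elapsed = busy = current = 0
    while not all(p.finished for p in parts):
        waiting = [p for p in parts if p.cpu == 0 and p.io > 0]
        # Supervisor: keep the current partition if it can run,
        # otherwise pass control to the next partition that is ready.
        for offset in range(len(parts)):
            candidate = (current + offset) % len(parts)
            if parts[candidate].cpu > 0:
                current = candidate
                parts[current].cpu -= 1
                busy += 1
                if parts[current].cpu == 0 and parts[current].io == 0:
                    parts[current].next_step()
                break
        for p in waiting:             # pending I/O progresses in the background
            p.io -= 1
            if p.io == 0:
                p.next_step()
        elapsed += 1
    return elapsed, busy


job = [(1, 3), (1, 3)]                # 1 tick of computing, then 3 ticks of I/O, twice
print(simulate([job]))                # one program alone
print(simulate([job, job, job]))      # three partitions sharing the CPU
```

In this model a single job keeps the CPU busy for 2 ticks out of 8, and running three such jobs one after another would take 24 ticks. Sharing the CPU between three partitions finishes the same work in 10 ticks, because one partition computes while the others wait for their I/O.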

The IBM 360/67 model was designed especially to provide this capability, and is an early example of time sharing. This technique allowed several users to attach to the computer on an interactive basis through a teletype terminal and interact with the computer as if it were dedicated to their exclusive use. It was described at the time as each user having control of virtually the whole machine and all of its resources — a virtual machine. This was not a simulated machine as we think of virtual machines today; users controlled the real computer. This worked essentially like the partitioning process except that the rotation between users was based on discrete time slices. Each user had an equal or priority slice of time. Control was transferred slice by slice — between users. The performance of the virtual machine to each user was based on the total number of users and the amount of resources required by each. This technique opened up the possibility for users connected to the same machine to communicate with each other — a local area network (LAN) of sorts, although not in the way we understand LANs today.
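Rotation by time slice can be sketched as a simple round-robin queue. The sketch assumes equal slices for every user (the text also mentions priority slices, which are not modelled), and the user names, workloads and the function name `time_share` are hypothetical.

```python
from collections import deque


def time_share(jobs, time_slice):
    """Give each user in turn at most `time_slice` ticks of CPU.
    `jobs` maps user -> ticks of CPU needed.
    Returns (user, finish_time) pairs in order of completion."""
    queue = deque(jobs.items())
    clock = 0
    finished = []
    while queue:
        user, remaining = queue.popleft()
        used = min(time_slice, remaining)
        clock += used
        if remaining > used:
            queue.append((user, remaining - used))   # back of the queue for another turn
        else:
            finished.append((user, clock))
    return finished


print(time_share({"ann": 3, "bob": 6, "cy": 2}, time_slice=2))
```

Each user's finish time depends on how many other users are in the queue and how much work they need, which is the point the text makes about the performance each user sees.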

Micro-computer origins and evolution

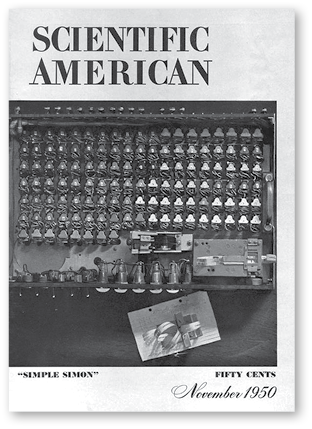

The idea of a home or personal computer is not as recent as one might assume. The cover story of the November 1950 issue of Scientific American was about Simple Simon, a primitive personal computer. This machine made use of 128 relays, with various groups of them used for specific functions. The rectangular tilted device at the bottom of the picture was the paper tape punch. The rectangular device just above and to the right is a paper tape reader used for input. The five lights next to the paper tape devices provided the answers to problems put to Simple Simon.

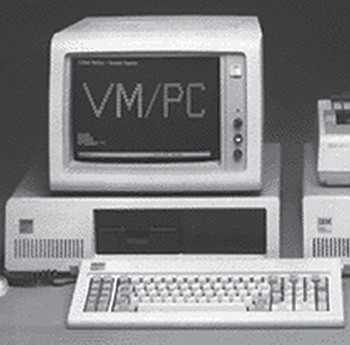

The IBM PC/G.

During the intervening years between Simple Simon and the introduction of the IBM PC/G, many different personal computers were manufactured. Names like Altair, Atari, Apple, Commodore, Sinclair, and many more were common up until that time. However, these were mostly thought of as game machines and generally did not have much business credibility. Use of personal computers for business was minimal. With the introduction of the PC/G in 1981, IBM brought its substantial business reputation, service and support into play. The business sector soon recognized the potential of the PC/G. One of the principal features that IBM incorporated into the PC was an open architecture. That meant any vendor could produce a device and attach it to the IBM PC, as the required information about the design interfaces was freely available. The preceding crop of PCs had provided few choices for attaching devices. The introduction of a few key ‘killer’ applications, such as word processors and spreadsheets, further enhanced the usability and credibility of the PC for business use.

Since the IBM PC/G’s introduction, phenomenal strides have been made in price, performance, and usability of PCs. They are many thousands of times more powerful today than that first generation machine, and the technology used in PCs is being used in just about every environment.

ARPANet and its evolution to the Internet

As early as 1960, Joe Licklider, a man who had a profound influence on the development of the Internet, said:

It seems reasonable to envision, for a time 10 to 15 years hence, a ‘thinking center’ that will incorporate functions of present-day libraries together with anticipated advances in information storage and retrieval. The picture readily enlarges itself into a network of such centers, connected one to another by wide-band communications lines and to individual users by leased-wire services. In such a system, the speed of the computers would be balanced, and the cost of the gigantic memories and the sophisticated programs would be divided by the number of users.

Joe Licklider was hired in October of 1962 as director of the Information Processing Techniques Office, a department within DARPA (Defense Advanced Research Projects Agency). His mandate was to find a way to realise his networking vision. The implementation of this vision into what has become known as ARPANet is attributed to the cooperation, hard work and genius of many individuals. Lawrence Roberts was the ARPANet program manager, and led the overall system design to create the fault tolerant system called ARPANet.

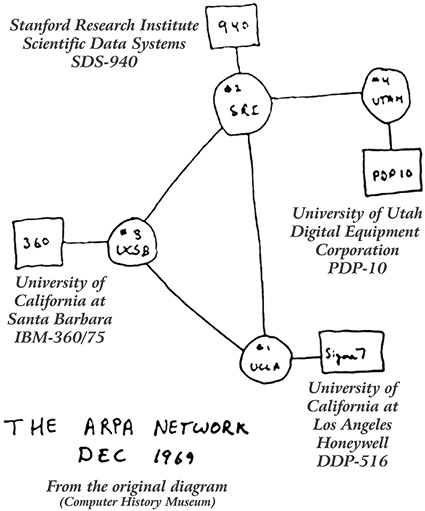

The initial network was drawn on a cocktail napkin (an annotated version is shown above). It consisted of four nodes. The first two hosts to connect, in October 1969, were at the University of California at Los Angeles and Stanford Research Institute. The University of California at Santa Barbara and the University of Utah completed the original ARPANet by December of the same year.

There have been many milestones over the intervening period. The first email was sent by Ray Tomlinson in 1971, and a file transfer protocol (FTP) was published the same year. Other network protocols were developed, with TCP in 1974 evolving into TCP/IP in 1976, and various search engines evolved (such as Archie, Gopher, Jughead, and Veronica). The World Wide Web was born when Tim Berners-Lee developed HyperText Markup Language (HTML) that would run across the Internet on different operating systems. This was modified by students at the University of Illinois, and Mosaic was born. This browser included features like icons, bookmarks, an attractive point and click interface, and pictures that made surfing the Internet attractive to ‘non-geeks’. From its inception, ARPANet expanded and grew until today there are more than 625 million hosts attached. Of course, the name has changed to the Internet. ARPANet itself officially shut down in 1990.

During the past 50 years there have been many inventions, innovations and progress made in computing — entire books have been written about small parts of these. In this chapter the author has deliberately chosen a few milestones that have made a difference — based on his experience. Another author may have chosen differently.

Comparative storage media.

In the first couple of paragraphs, the steps for processing data were described. Today those same functions are still being performed, albeit by a single machine. Most computer users do not even think about and some do not know about all of these functions, as they seem to happen seamlessly. Consider this: the four gigabyte flash card shown below is capable of storing the equivalent of a stack of 80-character punched cards 8.5 kilometres high. There are computers today that are capable of performing hundreds of trillions of calculations in less time than it took a person to make a single calculation on their slide rule in 1959. And we’ve only just begun.
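The punched card comparison can be checked against the card dimensions given earlier (80 characters per card, 150 cards per 25 mm of stack). The sketch assumes one byte per punched character, and it shows both common meanings of ‘gigabyte’ since the text does not say which is intended.

```python
CHARS_PER_CARD = 80        # characters per card, from the unit record section
CARDS_PER_25_MM = 150      # stack density, from the unit record section


def stack_height_km(capacity_bytes):
    """Height of the stack of cards needed to hold `capacity_bytes` characters."""
    cards = capacity_bytes / CHARS_PER_CARD
    height_mm = cards / CARDS_PER_25_MM * 25
    return height_mm / 1_000_000           # millimetres to kilometres


print(round(stack_height_km(4 * 10**9), 2))   # 4 GB counted in powers of ten
print(round(stack_height_km(4 * 2**30), 2))   # 4 GB counted in powers of two
```

The two results come out at roughly 8.3 and 8.9 kilometres, so the figure of 8.5 kilometres sits between them.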

Dr Henry Wolfe has been an active computer professional for more than 50 years. In 1979 Dr Wolfe took up an academic post at the University of Otago, where he is an Associate Professor in the Information Science Department. Since 1987 he has specialised in computer security, earning an international reputation in the fields of electronic forensics, encryption, surveillance, privacy and computer virus defences. Dr Wolfe writes about a wide range of security and privacy issues for Computers & Security, Digital Investigation (where he is also an editorial board member), Network Security, the Cato Institute, Cryptologia, and the Telecommunications Reports. He is a fellow of the New Zealand Computer Society. He is also a member of Standards New Zealand SC/603 Committee on Security, a member of the New Zealand Law Society’s Electronic Commerce Committee, and was on the board of directors of the International Association of Cryptologic Research, finishing up in January 2003.

References

Augarten, S (1983). State of the art: A photographic history of the integrated circuit. New Haven, Connecticut: Ticknor & Fields

Berkeley, E (1950). Simple Simon. Scientific American, 183, 40–43

Columbia University. Unit Record, from www.columbia.org/history/

Computer History Milestones. The computer history museum, from www.computerhistory.org

Fierheller, G (2006). Do not fold, spindle or mutilate. Markham, Ontario: Stewart Publishing & Printing

Gannon, P (2006). Colossus: Bletchley Park’s greatest secret. London: Atlantic Books

Licklider, JCR (1960). Man-computer symbiosis. IRE Transactions on Human Factors in Electronics, HFE-1(March), 4–11

Mosaic (n.d.). About NCSA Mosaic, from www.ncsa.illinios.edu/Projects/mosaic.html